Fastbrain was a prototype game that I created for a client at UC Davis. The goal of the project was to translate the client’s research and cognitive therapies into a children’s video game.

My client, a cognitive neuroscientist, was researching interventions for a group of developmental disorders, such as 22q11.2 deletion syndrome, Williams syndrome, and Turner syndrome. All of the disorders he was looking to treat affect visual perception. Individuals with any of these disorders have visual cortices that can’t process fine visual detail as well as those of typically developing individuals. As a result, they perceive the world in “lower resolution” and with less detail, even if their eyes are perfectly normal. Children who grow up with these deficits often go on to develop dyscalculia later in life.

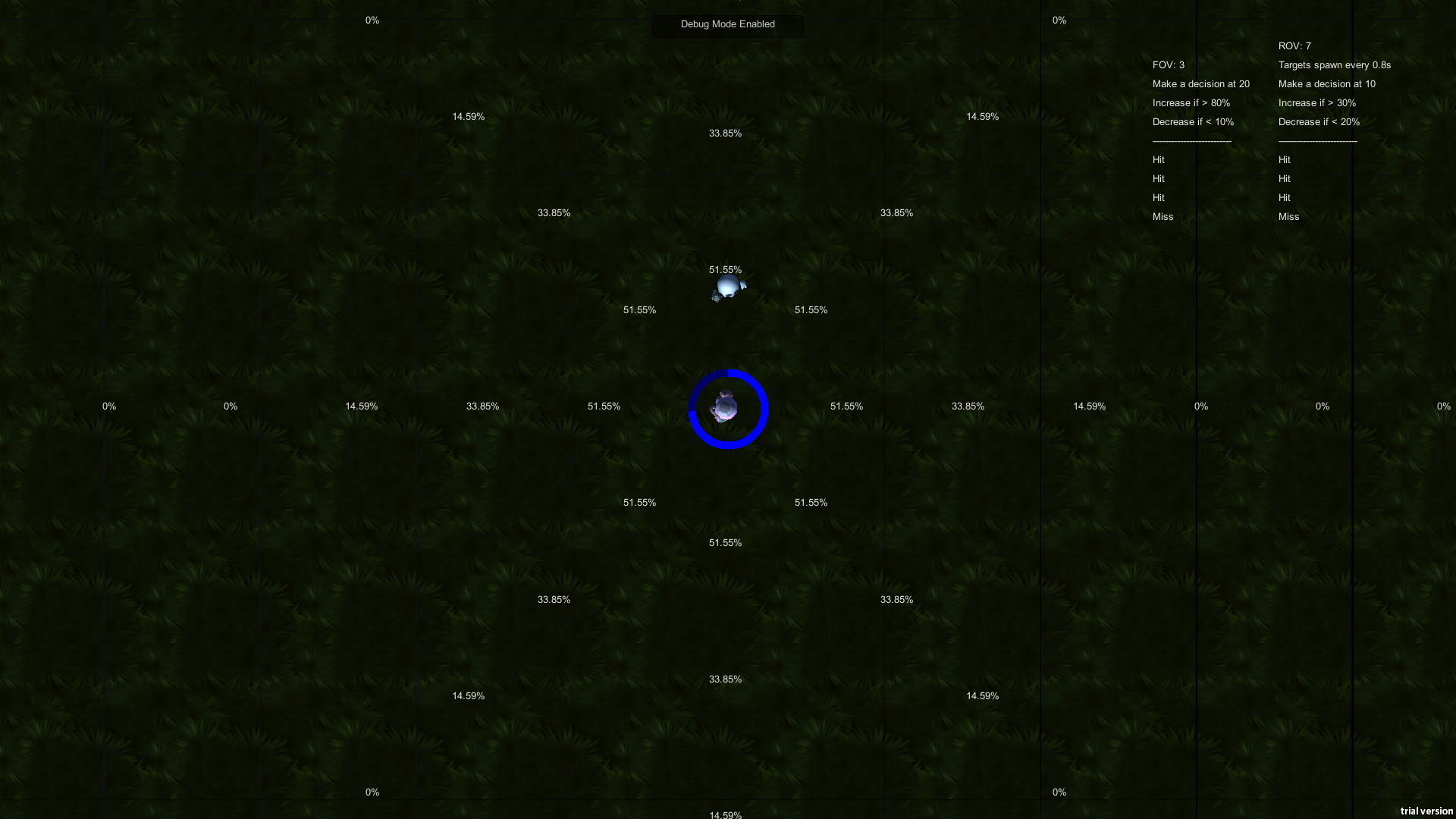

My client’s intervention required patients to see and respond to stimuli in their visual periphery. With his input, I designed a simple top-down shooter that challenged players to zap targets that appeared around the character in the center of the screen. When a target appeared, the player tilted a thumbstick in the target’s direction to fire. The simplicity of the input reduced the cognitive load of responding to the stimulus and kept the data we collected about the player’s performance relatively “clean”. We then fed this performance data into an adaptive difficulty algorithm that determined where, and for how long, each target would appear. My client and I designed that algorithm with the goal of optimally stimulating the brain and growing the portion of the patient’s visual field in which they could perceive finer details.
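The post doesn’t describe the internals of Fastbrain’s adaptive difficulty algorithm, so what follows is only an illustration of the general idea. A common technique from psychophysics is the adaptive staircase. In a 2-down/1-up staircase, two consecutive hits make the task harder, any miss makes it easier, and the parameter settles near the level where the player succeeds about 70.7% of the time. The `Staircase` class, its parameters, and the starting values below are hypothetical, chosen to mirror the two knobs mentioned above: where a target appears (eccentricity, in degrees) and how long it stays on screen (duration, in ms).

```python
from dataclasses import dataclass


@dataclass
class Staircase:
    """Hypothetical 2-down/1-up staircase over one stimulus parameter.

    `harder` is +1 if increasing the value makes the task harder
    (e.g. eccentricity in degrees) or -1 if decreasing it does
    (e.g. display duration in ms). Values are clamped to [lo, hi].
    """
    value: float
    step: float
    lo: float
    hi: float
    harder: int
    streak: int = 0  # consecutive hits since the last change

    def update(self, hit: bool) -> float:
        if hit:
            self.streak += 1
            if self.streak < 2:
                return self.value  # need two hits in a row before getting harder
            self.streak = 0
            delta = self.harder * self.step
        else:
            self.streak = 0
            delta = -self.harder * self.step  # any miss makes it easier
        self.value = min(self.hi, max(self.lo, self.value + delta))
        return self.value


# Hypothetical starting point: targets 10 degrees out, shown for 800 ms.
eccentricity = Staircase(value=10.0, step=1.0, lo=2.0, hi=30.0, harder=+1)
duration = Staircase(value=800.0, step=50.0, lo=100.0, hi=2000.0, harder=-1)

for hit in [True, True, True, False, True, True]:
    ecc = eccentricity.update(hit)
    dur = duration.update(hit)
    print(f"hit={hit}: next target at {ecc:.0f} deg for {dur:.0f} ms")
```

For simplicity this sketch moves both parameters on the same outcome. A real design might adjust them on separate trials or interleave several staircases so that a miss can be attributed to one factor at a time.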

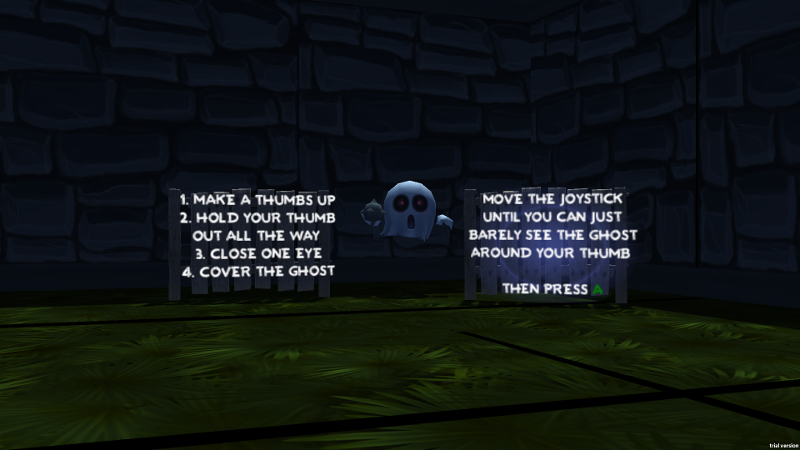

One of the more fun challenges I faced in creating Fastbrain was designing the game’s calibration feature. For the therapeutic aspect to be effective, the game needed to know how much of the player’s field of view each object would take up. This so-called “angular size” of an object depends on both its physical size and its distance from the viewer. Since Fastbrain was designed to be played on a screen of any size, by a player sitting an unknown distance away from it, we needed a clever way to estimate both quantities.

The solution came from the field of anthropometrics. A typical person’s arm length scales with the size of their hands and fingers, so the angle their thumb covers at arm’s length is fairly consistent from person to person: for the average person, a thumb held up at full arm’s length subtends about 2 degrees of visual angle. Using this fact, we designed a brief calibration scene that asked the player to make a thumbs-up gesture at arm’s length and resize a game object until it was just hidden by their thumb. That object then gave us a known reference, an on-screen size spanning roughly 2 degrees of the player’s vision, and from there we could calculate the approximate angular size of every object in the game.
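The geometry behind that last step can be sketched as follows. This is a minimal sketch rather than Fastbrain’s actual code: it assumes the calibration object is centered on the screen, the player looks straight at the center, the screen is flat and perpendicular to the line of sight, and pixels are square. The function names, the `THUMB_DEG` constant, and the 60 px calibration result are hypothetical. An object `w` pixels wide, centered on the line of sight at a distance of `D` pixels, subtends `2 * atan(w / (2 * D))`, so knowing that the thumb-covered object spans 2 degrees lets us solve for `D` in pixel units.

```python
import math

THUMB_DEG = 2.0  # rule-of-thumb visual angle of a thumb at arm's length


def viewing_distance_px(thumb_width_px: float, thumb_deg: float = THUMB_DEG) -> float:
    """Eye-to-screen distance, in pixels, implied by the calibration.

    An object w px wide centred on the line of sight subtends
    theta = 2 * atan(w / (2 * D)), so D = (w / 2) / tan(theta / 2).
    """
    return (thumb_width_px / 2) / math.tan(math.radians(thumb_deg) / 2)


def angular_size_deg(size_px: float, distance_px: float) -> float:
    """Visual angle of an object size_px wide, centred on the line of sight."""
    return math.degrees(2 * math.atan(size_px / (2 * distance_px)))


def eccentricity_deg(offset_px: float, distance_px: float) -> float:
    """Angle between the line of sight and a point offset_px from screen centre."""
    return math.degrees(math.atan(offset_px / distance_px))


# Hypothetical calibration result: the thumb just covered a 60 px object.
D = viewing_distance_px(60)
print(f"viewing distance: about {D:.0f} px")
print(f"30 px target: about {angular_size_deg(30, D):.2f} deg")
print(f"target 600 px from centre: about {eccentricity_deg(600, D):.1f} deg out")
```

Working in pixel units sidesteps both unknowns: the game never needs the physical screen size or the physical viewing distance, only their ratio, which the thumb supplies. Because of the arctangent, a fixed number of pixels spans fewer degrees the farther it is from the center of the screen, so a single pixels-per-degree constant is only a good approximation near the player’s point of fixation.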